We have already covered some of the basics of cutover strategy here, so now we’d like to switch gears a bit and look at data migration considerations and how they relate to the overall cutover strategy. This is something teams need to think about from the overall cutover perspective.

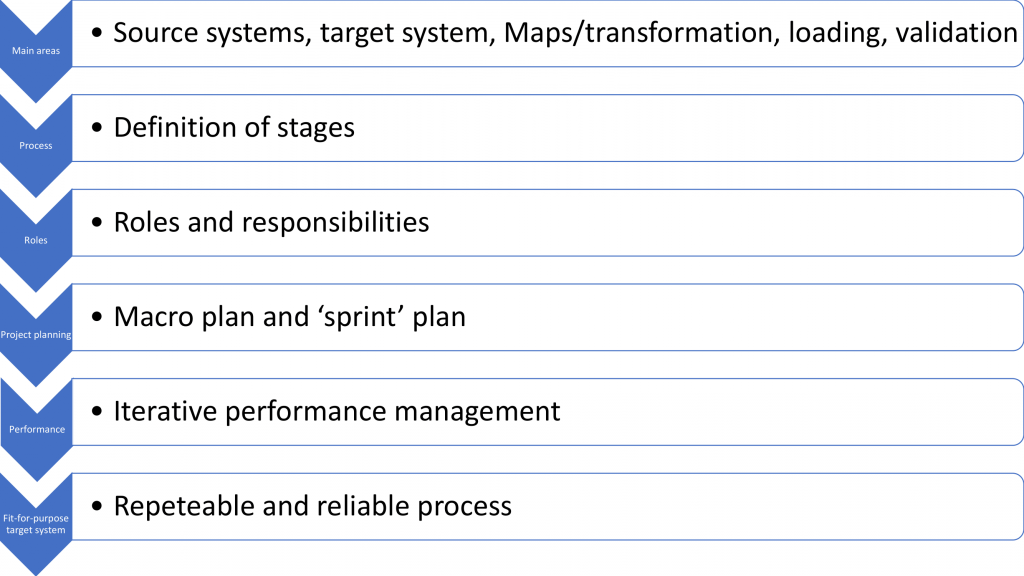

So here, our dear reader, we would like to walk you through the source systems, target systems, maps/transformations, and data loading.

P.S.: “Target System” is used deliberately rather than “Dynamics 365 business application” because sometimes more than one system goes live at that point, so you may need to think about the new systems you are integrating with as well as the Dynamics 365 application.

Source Systems Data Management – Data Cleansing

Starting with the source systems, data management and cleansing is probably the most important area we want to highlight, along with a couple of common risks we have observed so far.

The first is a lack of data readiness, which eventually delays go-live. Across the projects we have analyzed, lack of data readiness tops the list of reasons teams experience go-live delays.

The second is poor quality of migrated data, which also causes issues at go-live. Once you have gone live and the business discovers the implications of bad data in the production system, it gets messy and triggers further issues. Of course, not all of it comes from bad data in the source system; there are multiple places where things can go wrong, such as the transformations, the configuration, etc.

But based on our observations, critical issues can most often be traced all the way back to bad data at the source that somehow made it through the engine and landed in the Dynamics application.

As for mitigation, make sure that:

- The data cleansing (at source) is business-owned, very well defined, and managed with tooling and monitoring;

- Roles have been clearly defined; and

- The business involvement has been enforced.

And, finally, define the minimum data quality you need in order to move data through the data migration engine without errors. You need to be quite tough about these standards, because the amount of project time wasted trying to clean up data in the engine or at the target system may be far too much, when the problem could have been solved at the source.
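To make the idea of a minimum quality standard concrete, here is a minimal sketch of a quality gate applied at the source, before records reach the migration engine. The field names (`account_number`, `name`, `country`) and the three rules are purely illustrative assumptions; the real rules should come from the business data owners.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class QualityRule:
    name: str
    check: Callable[[dict], bool]  # returns True when the record passes


def _has_text(record: dict, field: str) -> bool:
    return bool(str(record.get(field) or "").strip())


def _is_two_letter_code(record: dict, field: str) -> bool:
    value = record.get(field)
    return isinstance(value, str) and len(value) == 2 and value.isalpha()


# Hypothetical minimum standards for a customer record (assumptions, not a real schema).
RULES = [
    QualityRule("account number present", lambda r: _has_text(r, "account_number")),
    QualityRule("name present", lambda r: _has_text(r, "name")),
    QualityRule("country is a 2-letter code", lambda r: _is_two_letter_code(r, "country")),
]


def quality_gate(records, rules=RULES):
    """Split records into those passing every rule and a rejection report."""
    accepted, rejected = [], []
    for record in records:
        failures = [rule.name for rule in rules if not rule.check(record)]
        if failures:
            rejected.append((record, failures))  # send back to the business to fix at source
        else:
            accepted.append(record)
    return accepted, rejected


source_records = [
    {"account_number": "C-001", "name": "Contoso", "country": "US"},
    {"account_number": "", "name": "Fabrikam", "country": "USA"},
]
accepted, rejected = quality_gate(source_records)
```

With this sample, the first record is accepted and the second is rejected with two named failures. The rejection report is the useful part: it gives the business a concrete list of what to clean at the source instead of leaving the migration team to patch data in the engine.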

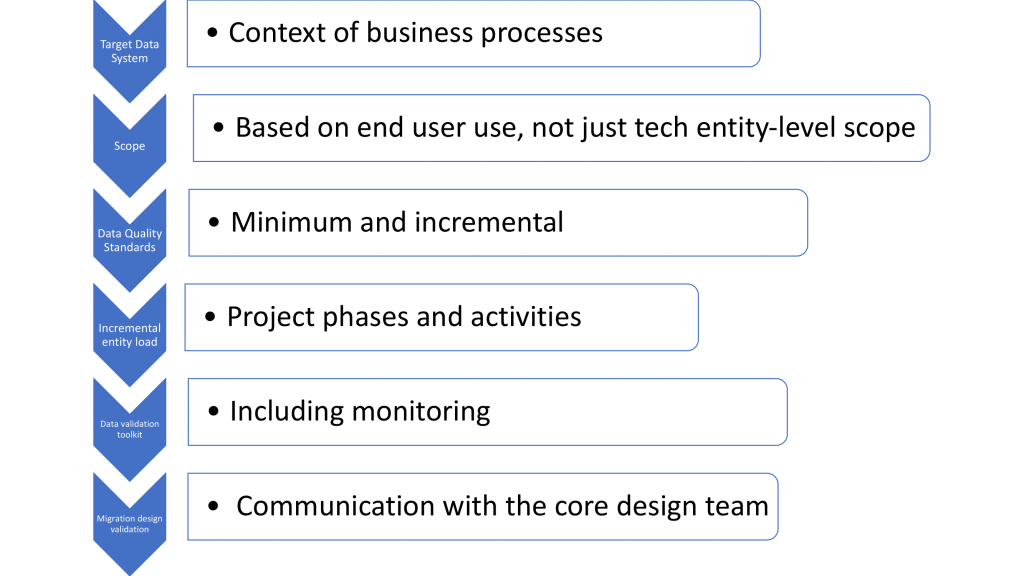

Target System

Next up is the target system, Dynamics 365 apps in particular.

Once the target system starts receiving migrated data from the source, teams often do not realize until very late, sometimes as late as User Acceptance Testing (UAT), that the data is not sufficiently usable by the system. When that happens, the wider project team can’t help the data migration team validate the data as it comes through.

As a consequence, people won’t take the time and effort to actually validate the data, since it’s not usable. Make sure this does not become a risk for your project, and take specific measures to deliver usable data into the target system. Of course, usability can be limited during the first iteration, but you need the data to be fully usable much earlier than UAT.

Another thing to consider is the scope of the migration, which is often defined in technical terms: data entities, number of records, attributes, etc. The target system, however, is defined not just at that level, but also at the level of how it’s going to be used in production.

Always keep in mind who the end user of the data is once it arrives in the target system, and what minimum standards need to be met before the data is accepted into the Dynamics system.

Mapping and Transformations

Mapping and transformation often happen deep within the data migration team and are not always visible to business users or even to the rest of the project team. As a result, hidden errors can make it all the way into the production system, where they surface as something that simply does not work.

It is strongly recommended to define the mapping and transformation rules, make them explicit, and share them with the business data owners, so they understand what is happening to the data, why, what needs to be done with it, and how it affects the bigger picture.

Plan for incremental validation of mapping and transformation rules by the business. Again, you won’t have all the answers right from the beginning, as the transformation engine and mappings tend to evolve as the project progresses. Hence, you want clear rules for moving data from the source to the target system.

Lastly, as a mitigation: do not run automated transformations where the rules are vague and the business cannot give you a clear definition of how the transformation should work. Doing so will end up creating more problems than it solves.
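One way to keep mapping rules explicit and shareable is to hold them as data rather than burying them in transformation code. The sketch below is only an illustration under assumed names: the source fields (`cust_name`, `cust_ctry`), the target fields, and the owner label are hypothetical. It refuses to transform any field that has no agreed rule instead of guessing.

```python
class UndefinedRuleError(Exception):
    """Raised when a source field has no business-approved mapping rule."""


# Hypothetical rule table: source field -> (target field, transform, approving owner).
MAPPING_RULES = {
    "cust_name": ("name", str.strip, "Sales data owner"),
    "cust_ctry": ("country_code", str.upper, "Sales data owner"),
}


def transform(source_record, rules=MAPPING_RULES):
    """Apply explicit rules only; stop rather than improvise on unmapped fields."""
    target = {}
    for field, value in source_record.items():
        if field not in rules:
            raise UndefinedRuleError(
                f"No approved mapping rule for source field '{field}'"
            )
        target_field, convert, _owner = rules[field]
        target[target_field] = convert(value)
    return target


def describe_rules(rules=MAPPING_RULES):
    """Render the rules as plain text that business data owners can review."""
    return [
        f"{src} -> {tgt} ({fn.__name__}), approved by {owner}"
        for src, (tgt, fn, owner) in rules.items()
    ]


migrated = transform({"cust_name": " Contoso ", "cust_ctry": "us"})
```

Because the rules live in one table, `describe_rules()` can produce a review document for each validation round, and a new source field fails loudly until the business has defined how it should be handled.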

Data Loading

Last but not least, here are some recommendations on the loading of the data:

- The entity load sequence must be defined, including incremental loads for high-volume entities;

- Ensure early validation;

- Best practices should be applied for loading data into the target system:

- Automate only if rules are well defined and complete;

- Strive to make loads repeatable;

- Explicit performance goals should be set based on time provided by the cutover window;

- Make performance management part of the process.
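The sequencing and performance points above can be sketched roughly as follows. The entity names, their dependencies, and the 50% safety factor are assumptions made for illustration only; this is not how any particular Dynamics 365 tool sequences loads. The sketch derives a load order from declared dependencies, splits a high-volume entity into incremental batches, and turns the cutover window into an explicit throughput target.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical entities: each maps to the entities that must be loaded first.
DEPENDENCIES = {
    "Customers": set(),
    "Products": set(),
    "SalesOrders": {"Customers"},
    "SalesOrderLines": {"SalesOrders", "Products"},
}


def load_sequence(dependencies):
    """Return an order in which every entity follows its prerequisites."""
    return list(TopologicalSorter(dependencies).static_order())


def batches(record_count, batch_size):
    """Yield (start, end) offsets for incremental loads of a high-volume entity."""
    for start in range(0, record_count, batch_size):
        yield start, min(start + batch_size, record_count)


def required_rate(record_count, window_hours, safety_factor=0.5):
    """Records per second needed to finish within a fraction of the cutover window.

    safety_factor is an assumed share of the window reserved for loading;
    the rest is left for validation and reruns.
    """
    usable_seconds = window_hours * 3600 * safety_factor
    return record_count / usable_seconds


order = load_sequence(DEPENDENCIES)
target_rate = required_rate(3_600_000, window_hours=4)  # 500.0 records per second
```

Comparing `target_rate` against the throughput measured in each rehearsal load is one simple way to make performance management part of the process rather than a surprise during cutover.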